Event date:

May 25, 2021, 5:30 pm

Distilling Knowledge in Multiple Student Networks using Generative Adversarial Networks (GANs)

Supervisor

Dr. Murtaza Taj

Student

Muhammad Musab Rasheed

Venue

Zoom Meetings (Online)

Event

MS Thesis defense

Abstract

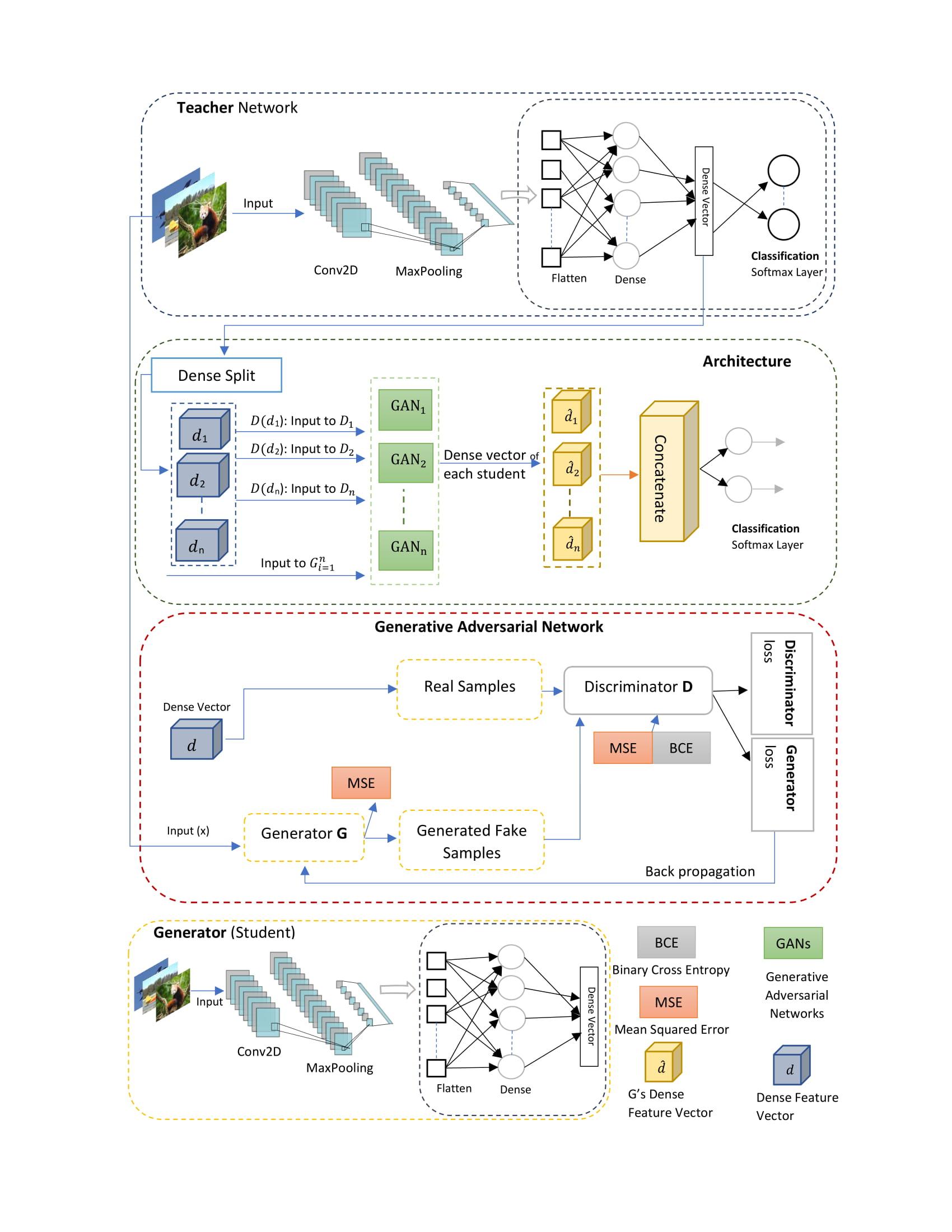

Because of their large size, deep convolutional networks are difficult to deploy on edge devices. Knowledge distillation is one approach to compressing deep neural networks: knowledge is transferred to a smaller network (the student) by training it on a transfer set produced by a larger network (the teacher). The performance of the teacher-student methodology was further improved by using multiple student networks instead of a single one, where each student tries to mimic a part of the dense representation learnt by the teacher network while minimizing a reconstruction loss. Convolutional neural networks, however, are not good at generating features or minimizing the reconstruction loss. Generative models such as variational autoencoders (VAEs) and generative adversarial networks (GANs) address this problem. In the proposed methodology, we use the GAN framework, which is better at minimizing reconstruction loss than convolutional neural networks, to transfer knowledge to multiple student networks. We introduce a novel loss function that combines mean squared error with the adversarial loss of GANs. This not only stabilizes the GANs while distilling knowledge into smaller networks but also helps in learning the true data distribution. We evaluated our approach on several benchmark datasets, namely MNIST, FashionMNIST and CIFAR-10. It improves accuracy over state-of-the-art methods and reduces computation by compressing the network parameters.
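As a rough illustration of the combined loss described in the abstract, the sketch below adds a mean squared error term to an adversarial term for a single student. It is not the thesis implementation: the function names, the `mse_weight` parameter, and the choice of the non-saturating generator loss (-log D) are assumptions made for this example, since the abstract states only that mean squared error is used along with the adversarial loss.

```python
import math


def mse(student, teacher):
    """Mean squared error between two equal-length feature vectors."""
    if len(student) != len(teacher):
        raise ValueError("feature vectors must have the same length")
    return sum((s - t) ** 2 for s, t in zip(student, teacher)) / len(student)


def adversarial_loss(disc_prob_on_student, eps=1e-12):
    """Non-saturating generator loss, -log D(student output).

    disc_prob_on_student is the discriminator's probability that the
    student's features came from the teacher (assumed form, not confirmed
    by the abstract). eps guards against log(0).
    """
    return -math.log(max(disc_prob_on_student, eps))


def student_loss(student, teacher, disc_prob_on_student, mse_weight=1.0):
    """Combined loss: weighted MSE plus adversarial term.

    mse_weight is a hypothetical balancing factor introduced for this sketch.
    """
    return mse_weight * mse(student, teacher) + adversarial_loss(disc_prob_on_student)
```

In this sketch the MSE term pulls the student's features toward the teacher's slice of the representation, while the adversarial term rewards features that the discriminator cannot tell apart from the teacher's.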

Zoom Link: https://lums-edu-pk.zoom.us/j/96074049125

Meeting ID: 9607 4049 125